2025-07-25 17:23:47.AIbase.

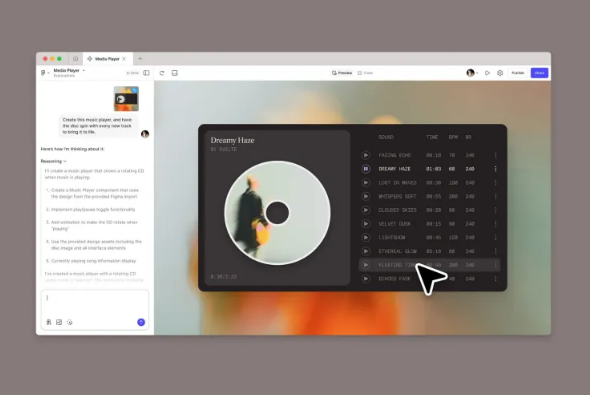

Trickle Magic Canvas Launch: No-Code! Co-create Production-Grade Applications with AI, Revolutionizing the Future of Development!

2025-07-25 15:47:55.AIbase.

Tesla Emphasizes the Safety of Assisted Driving: AI Hardware Support

2025-07-25 15:40:29.AIbase.

Memories AI Launches the World's First Artificial Intelligence Visual Memory Model and Secures $8 Million in Seed Funding

2025-07-25 14:35:05.AIbase.

MyShell ShellAgent 2.0 Launch: Create an App with One Sentence, the AI Revolution Without Frontend is Coming

2025-07-25 11:44:06.AIbase.

Zhejiang University Alumni Launch an AI Code Testing Tool, Create a Bug-Free Website in 30 Minutes

2025-07-25 10:38:24.AIbase.

Figma Make Opens to All Users: AI-Powered Design, Efficiency Within Reach

2025-07-25 10:37:17.AIbase.

Google Labs' Powerful New Product Opal: No-Code! Build AI Applications with Natural Language to Unlock Future Productivity

2025-07-25 10:33:27.AIbase.

One Third of Americans Use AI Tools to Seek Career Transitions

2025-07-25 10:26:51.AIbase.

Nanyang Technological University Collaborates with Shanghai AI Lab to Release PhysX-3D: Infusing Physical Soul into AI-Generated 3D Models!

2025-07-25 10:23:35.AIbase.

Google Releases AI Application Building Tool Opal: Create AI Applications with Natural Language Without Coding

2025-07-25 10:03:07.AIbase.

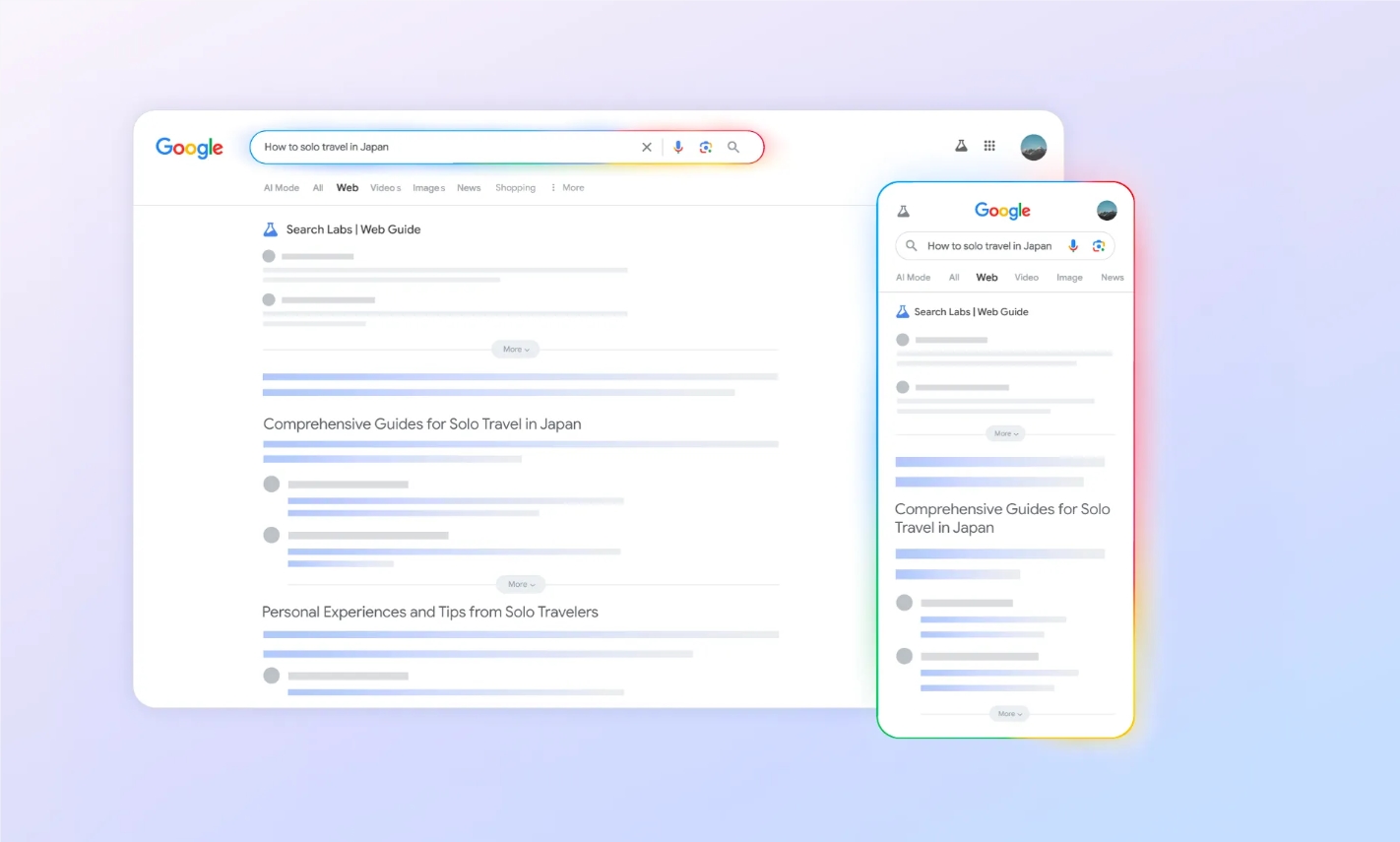

Google says: As long as AI content is compliant, the basic principles of SEO still apply

2025-07-25 09:56:30.AIbase.

White House Orders Tech Companies to Limit 'Woke AI' Aimed at Promoting Political Control

2025-07-25 09:55:03.AIbase.

1 Day to Launch a Custom AI? Ant Treasure Box Agent Enterprise Edition Released with Payment and Marketing MCP

2025-07-25 09:54:56.AIbase.

Where is SEO Heading in the AI Era? Google Experts Reveal the Future of Search, Traditional Rules Remain the Foundation

2025-07-25 09:51:23.AIbase.

Google Launches AI-Powered Web Guide to Redefine the Search Experience

2025-07-25 09:49:04.AIbase.

Anthropic Launches Audit Agent to Aid in AI Model Alignment Testing

2025-07-25 09:38:50.AIbase.

Industrial AI Star CVector Secures $1.5 Million in Funding! Former Shell Engineer Builds an Industrial Brain to Challenge Traditional Manufacturing

2025-07-25 09:22:16.AIbase.

Alibaba's Wan 2.2 Is About to Launch: Open-Source Video Generation AI Challenges Sora

2025-07-25 09:21:27.AIbase.

AI Video Memory Revolution Is Here! Memories.ai Secures $8 Million in Funding to Challenge the Limit of Analyzing Millions of Hours of Video

2025-07-25 09:21:24.AIbase.